How do I discuss the use of Generative Artificial Intelligence (AI) tools, like ChatGPT, with my students?

Generative Artificial Intelligence (AI) has received much attention across higher education. Instructors and students alike are curious and concerned about its use. This resource outlines an approach instructors may consider in preparing to talk to their students about AI.

Generative AI refers to technology that can create new, original content, such as images and text, based on patterns learned from the existing data it was trained on. Regardless of whether you permit students to use AI text generation tools, you should talk to them about the limitations and possibilities of these tools as well as their ethical use.

When discussing generative AI with students, you should consider:

1. What do your students know about AI and academic integrity?

Talk to your students about academic integrity as it relates to tools such as ChatGPT. Have a full conversation so that students are clear on your expectations, since your rules for AI use may differ from those of other instructors.

If you are allowing the use of generative AI in your class, ensure students are directed toward resources on citing AI-generated content.

If you are not allowing students to use generative AI, explain that although Memorial University's policies on academic integrity do not explicitly reference generative AI, submitting AI-generated work without proper attribution can be considered plagiarism under the University's regulations on academic misconduct (6.12.4).

2. What are the ethical considerations of generative AI?

AI may facilitate more equitable learning and help democratize education by putting resources in the hands of more students (Mehta, 2023). While increased accessibility can benefit students (Mollick & Mollick, 2023), other ethical impacts should be considered.

Some people feel there is no ethical way to use AI generators, given that they are often trained on content used in violation of copyright law (Crabapple, 2023). Other concerns include the reproduction of harmful stereotypes in AI-generated content (Wu, 2022), OpenAI's use of exploitative labour practices (Perrigo, 2023), and the wasteful extraction of environmental resources by the AI industry (Crawford, 2021).

Considering these potential harms, some students may not wish to use generative AI tools. Additionally, some AI tools are not available in every country, and some require paid subscriptions. As such, instructors may want to avoid requiring students to use these tools for assessment purposes.

3. What is the relationship between writing, research, and AI in your discipline?

As generative AI continues to make strides, its impact on various academic fields becomes increasingly evident. Consider how AI-generated content and tools might intersect with traditional research and writing practices in your specific discipline.

Some researchers feel generative AI tools like ChatGPT will streamline academic publishing and help researchers produce better, more efficient writing (Alkhaqani, 2023; Salvagno et al., 2023). However, it is important to remember that writing is not just a means of communicating research; it is a fundamental process that enhances critical thinking and allows researchers to refine their thoughts and theories.

Share with your students how scholars in your discipline use the writing process to further their thinking.

4. Why might students rely on generative AI?

Students use generative AI for a number of reasons (Brew et al., 2023). Although little research exists on why students use tools like ChatGPT, this is a growing area of interest for scholars.

Research suggests that university students may engage in academic misconduct when they feel academically underprepared and when they are overscheduled with other work and leisure activities (Yu et al., 2018). To help mitigate these causes, ensure students know what academic supports are available to them, such as the Writing Centre and Academic Success Centre.

Additionally, students may be less likely to submit AI-generated content as their own work if they have ample time and support to complete assignments on their own. Consider assigning multiple steps in the writing process (prewriting, outlines, drafts, and revisions) and holding conferences with students to discuss their progress. You could also flip your classroom and have students begin an essay in class that they will continue on their own.

Questions to Start the Conversation

Your conversation should offer clarity to students, centre students and their experiences, and provide room for reflection. Below are some questions to help start the conversation.

Ask your students:

- What is AI and how does it work?

- Have you used AI tools before? For what purposes?

- Is it cheating to use AI tools to help complete your school work? Why or why not?

- Why are students often required to write essays in university?

- What role might AI tools play in your professional life?

- Can you tell when text has been written by ChatGPT?

- What questions do you have about generative AI?

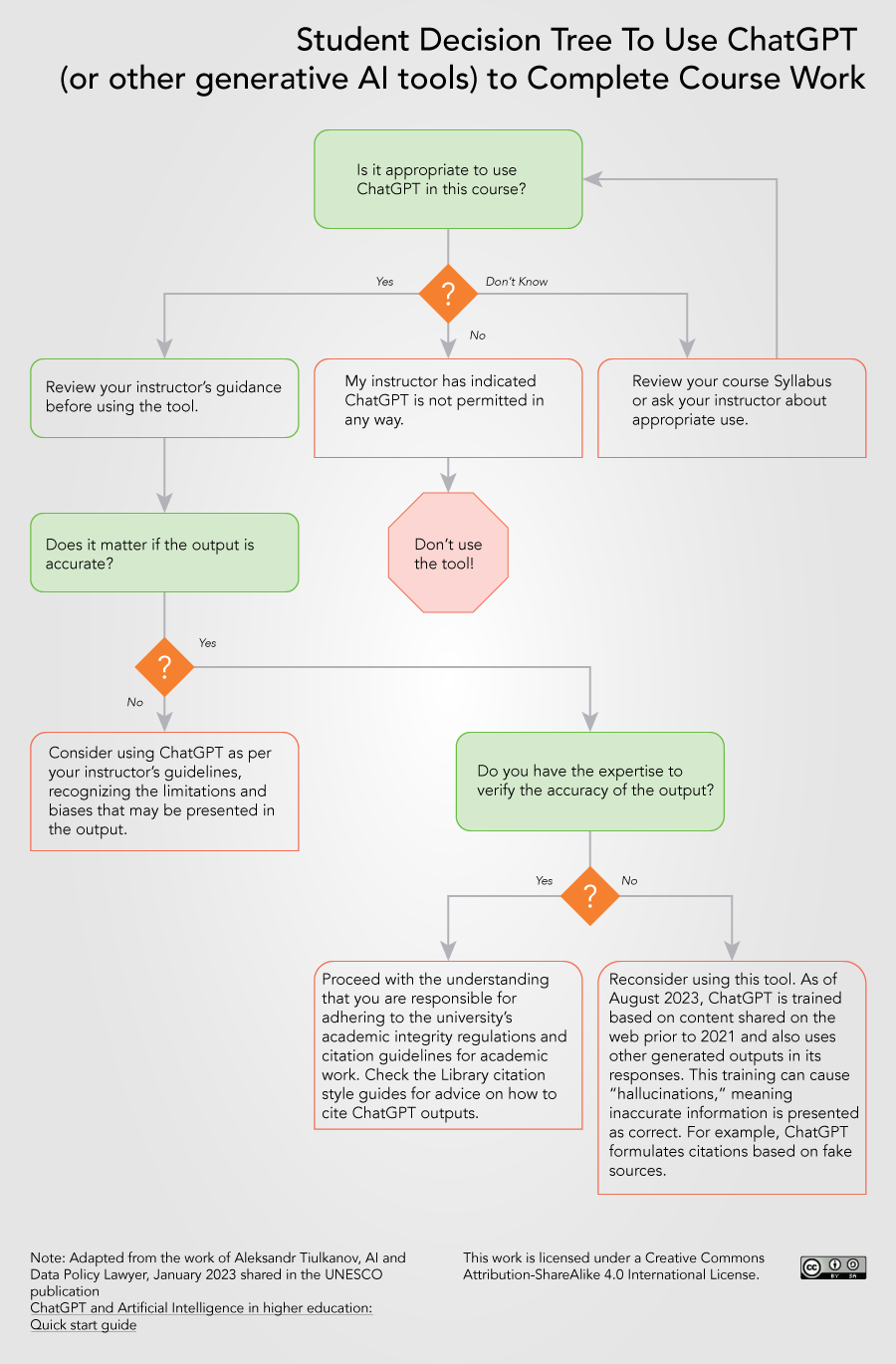

To use ChatGPT or not: A student decision tree

The following decision tree provides some high level guidance to students when considering using ChatGPT in their course work. It may help to inform your conversation with them as well. A downloadable PDF version is also available should you wish to share this with your class.

References

- Alkhaqani, A. L. (2023). Can ChatGPT help researchers with scientific research writing? Journal of Medical Research and Reviews, 1(1), 9-12. https://www.researchgate.net/profile/Ahmed-Alkhaqani/publication/372717673_Can_ChatGPT_Help_Researchers_with_Scientific_Research_Writing/links/64c3d34c6f28555d86dad613/Can-ChatGPT-Help-Researchers-with-Scientific-Research-Writing.pdf

- Bender, E. M., Gebru, T., McMillan-Major, A., & Mitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (pp. 610-623). Association for Computing Machinery. https://doi.org/10.1145/3442188.3445922

- Brew, M., Taylor, S., Lam, R., Havemann, L., & Nerantzi, C. (2023). Towards developing AI literacy: Three student provocations on AI in higher education. Asian Journal of Distance Education, 18(2), 1-11. http://www.asianjde.com/ojs/index.php/AsianJDE/article/view/726

- Crabapple, M. (2023, May 2). Restrict AI illustration from publishing: An open letter. Center for Artistic Inquiry and Reporting. https://artisticinquiry.org/AI-Open-Letter

- Crawford, K. (2021). Atlas of AI: Power, politics, and the planetary costs of artificial intelligence. Yale University Press.

- Mehta, R. (2023, April 14). Banning ChatGPT will do more harm than good. MIT Technology Review. https://www.technologyreview.com/2023/04/14/1071194/chatgpt-ai-high-school-education-first-person/

- Mollick, E. R., & Mollick, L. (2023, March 17). Using AI to implement effective teaching strategies in classrooms: Five strategies, including prompts. SSRN. https://doi.org/10.2139/ssrn.4391243

- Perrigo, B. (2023, January 18). OpenAI used Kenyan workers on less than $2 per hour. Time. https://time.com/6247678/openai-chatgpt-kenya-workers/

- Salvagno, M., Taccone, F. S., & Gerli, A. G. (2023). Can artificial intelligence help for scientific writing? Critical Care, 27(1), 1-5. https://doi.org/10.1186/s13054-023-04380-2

- UNESCO-IESALC. (2023). ChatGPT and artificial intelligence in higher education: Quick start guide. https://www.iesalc.unesco.org/wp-content/uploads/2023/04/ChatGPT-and-Artificial-Intelligence-in-higher-education-Quick-Start-guide_EN_FINAL.pdf

- Wu, G. (2022, December 22). 5 big problems with OpenAI's ChatGPT. MakeUseOf.

- Yu, H., Glanzer, P. L., Johnson, B. R., Sriram, R., & Moore, B. (2018). Why college students cheat: A conceptual model of five factors. The Review of Higher Education, 41(4), 549-576. https://doi.org/10.1353/rhe.2018.0025

Originally Published: August 22, 2023